
This article is essentially an update of an older one titled “On the Record: ‘Technical Ability’”, reformatted for better clarity and with further amendments that I feel would be useful.

More than a year back, I implemented the new ranking system where both tonal and technical performance were components in determining a headphone/earphone’s overall sound quality, at least within the context of this website. As I’ve stated in that post:

“… as a quick refresher (and I really mean quick, don’t argue with me about what I’m about to say since they’re condensed summaries of 5,000 word articles) tonality is basically tuning and frequency response, while technicalities is an umbrella term referring to unmeasurable aspects such as resolution, transients and imaging.

So, why the change?

From the beginning this system was already being used for my ranking list, just subconsciously and in a more ‘arbitrary’ way. Specifying and breaking down the main criteria of my grading system helps me be more transparent about my inner processes, as well as to help me be more consistent with my rankings.

I’ll probably not break down my rankings further than this since the problem of weighting individual components gets worse the more components I specify. I think there should at least be a certain degree of “abstractness” to the rankings since, at the end of the day, this is a subjective list of personal opinions.”

And that’s where I left things, but there’s a nagging feeling at the back of my head that tells me that I should update my “Technical Ability” article (which is almost 3 years old at this point, originally posted on Head-Fi too!) and also consolidate that with my explanation of tonality (some of which is also deep within the rather lengthy Graphs 101 article).

So without further ado here’s a lengthy ramble about how I, some guy on the internet, review and evaluate headphones & earphones through the concepts of tonality and technicalities.

Tonality

FR, Sound Signature, et cetera

This metric is probably the less controversial of the two, considering that it is the most directly measurable aspect of audio. Yet it may also be the more controversial one, considering that it’s governed by the highly subjective phenomenon that is… well, personal taste.

Now, unfortunately, this topic requires a baseline understanding of how frequency response measurements work, so if you’re not yet familiar with them, do give my Graphs 101: How to Read Headphone Measurements article a good read-through. After all, the concepts of tonality and frequency response are inherently linked, so to properly understand the former you have to understand the latter.

All set? Here we go.

It’s obvious, but I feel like I have to reiterate this: tonality is derived from the word “tone”, hence it is a metric primarily based within the frequency domain. The frequency response of an IEM therefore affects its tonality. It is sometimes also referred to as “tone colour”, though I’d reserve that term for the metric of timbre (explained later).

(This definition is separate from the music-theory definition of the word, which is about the arrangement of pitches and chords. In the audio reproduction context, again, tonality is linked to frequency response.)

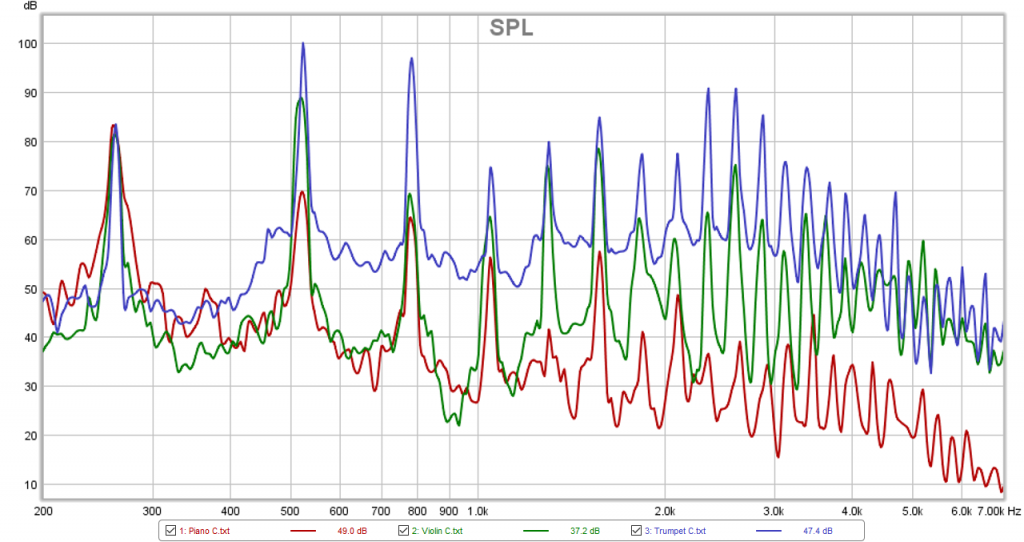

Breaking it down further, the tone of any given instrument is made up of the fundamental frequency (which is also referred to as the fundamental tone, this determines the note in question) and the harmonic frequencies (which is also referred to as the overtones, these give the instruments their unique sound). For instance, a C4 (middle C) note played by different instruments will look as follows on a frequency response plot:

Even though each instrument is playing the same note, the tonality of each instrument is clearly different. And measurable! So for those buying into the myth that it’s somehow impossible to measure harmonics… here you go.
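For an idealised harmonic instrument, the overtones are simply integer multiples of the fundamental, so every instrument playing C4 puts its harmonics at the same frequencies; what differs between them is the level of each harmonic, which is what the plot above shows. (Real instruments such as piano strings are slightly inharmonic.) A minimal sketch, assuming 12-tone equal temperament with A4 = 440 Hz; the function names are hypothetical:

```python
A4_HZ = 440.0  # assumption: concert pitch A4 = 440 Hz, 12-tone equal temperament


def midi_to_hz(note: int) -> float:
    """Equal-tempered frequency of a MIDI note number (C4 = 60, A4 = 69)."""
    return A4_HZ * 2 ** ((note - 69) / 12)


def harmonic_series(fundamental_hz: float, count: int) -> list[float]:
    """Fundamental (n = 1) followed by overtones (n = 2..count), idealised as exact multiples."""
    return [n * fundamental_hz for n in range(1, count + 1)]


c4 = midi_to_hz(60)  # middle C, roughly 261.6 Hz
for n, freq in enumerate(harmonic_series(c4, 6), start=1):
    label = "fundamental" if n == 1 else f"harmonic {n}"
    print(f"{label:>12}: {freq:7.1f} Hz")
```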

So when someone refers to “tonal balance”, that’s also a reference to how accurate an instrument may sound; after all, if the balance between the fundamental and each order of harmonics is correct, so must be the sound.

From there, there is the debate on what constitutes “correct” or neutral. Because the concept of neutrality is so fluid and subjective, especially in the IEM world where the individual’s own Head-Related Transfer Function (HRTF) rears its ugly head the strongest, tonality also becomes a very subjective metric.

So then, what is neutral?

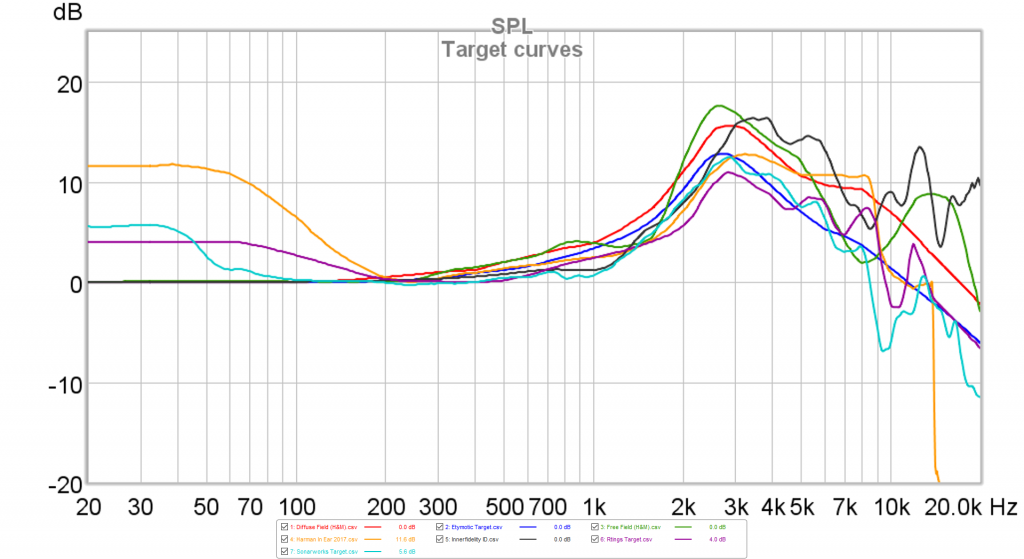

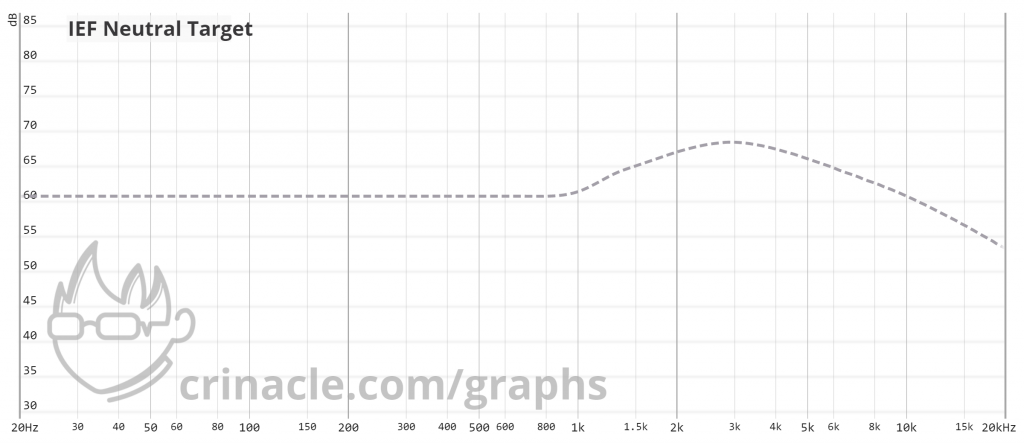

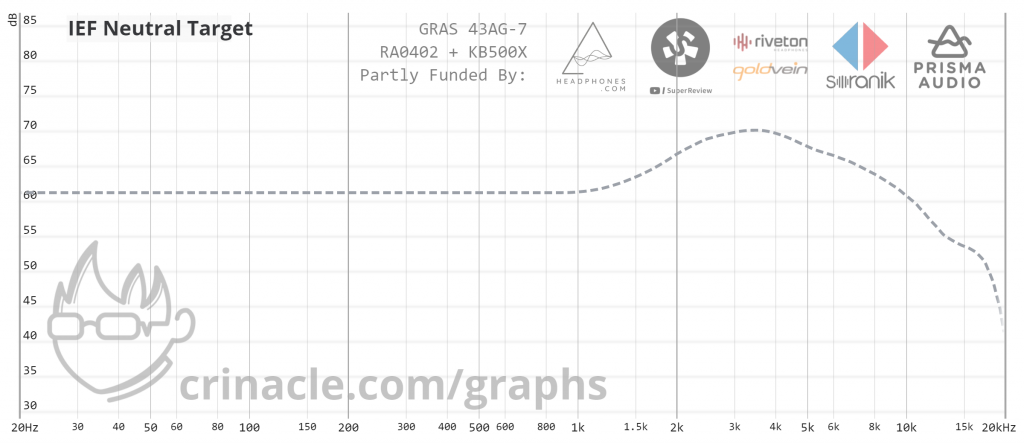

Even within the academic world, the debate rages on. And if the objectivists cannot decide, you can only imagine the chaos that reigns in the anti-graph subjectivist camp, where peace hangs only by the flimsy thread of “agreeing to disagree”. But as a reviewer, it is far more important that people understand my perception of neutrality when reading my reviews, which is what my “IEF Neutral Targets” provide.

So whenever you read a review on In-Ear Fidelity, you can at least be assured that all my tone-related descriptors are done relative to these neutral targets. Transparency is key, and so is consistency.

You hear the term “colouration” thrown around a lot, but here’s how I see it. When the tonality of the sound gets skewed in any direction, it goes from having a neutral tone to a coloured one. Skewing towards the low frequencies creates a “dark tonality”, while skewing towards the high frequencies creates a “bright tonality”. Being lower-frequency-biased puts the focus more on the fundamentals and lower-order harmonics, which subjectively gives instruments some extra richness and heft. On the other hand, being higher-frequency-biased puts the focus more on the higher-order harmonics, which can boost the clarity of instruments as well as improve the perception of “air”.
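To illustrate what “skewing” does to an instrument’s harmonics, here’s a minimal sketch that models colouration as a simple spectral tilt: a constant number of dB per octave, pivoting at 0 dB around 1 kHz. The straight-line tilt, the pivot and the function name are all assumptions for illustration, not how the IEF Neutral Targets are defined. A negative slope lifts the fundamental relative to the upper harmonics (darker); a positive slope does the opposite (brighter).

```python
import math


def tilt_db(freq_hz: float, db_per_octave: float, pivot_hz: float = 1000.0) -> float:
    """Gain in dB of an idealised spectral tilt that is 0 dB at pivot_hz.

    Negative slopes favour the lows ("dark"), positive slopes the highs ("bright").
    """
    return db_per_octave * math.log2(freq_hz / pivot_hz)


# The first eight harmonics of a note near middle C (hypothetical example).
harmonics = [262.0 * n for n in range(1, 9)]
for slope in (-1.0, 1.0):
    gains = [tilt_db(f, slope) for f in harmonics]
    # The 8th harmonic sits three octaves above the fundamental, so it shifts by 3 x slope.
    print(f"{slope:+.0f} dB/oct: 8th harmonic vs fundamental {gains[-1] - gains[0]:+.1f} dB")
```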

Here’s where things get very subjective: how does one determine the “quality” of a transducer’s “tonality”?

Many assume that, because I run the world’s largest public database of headphone & earphone measurements, I rank the tone of any given headphone/IEM based on its deviation from some target curve. While certain target curves are a factor in my rankings (especially my own), what I really judge is how well a given transducer presents the sound signature it is trying to present.

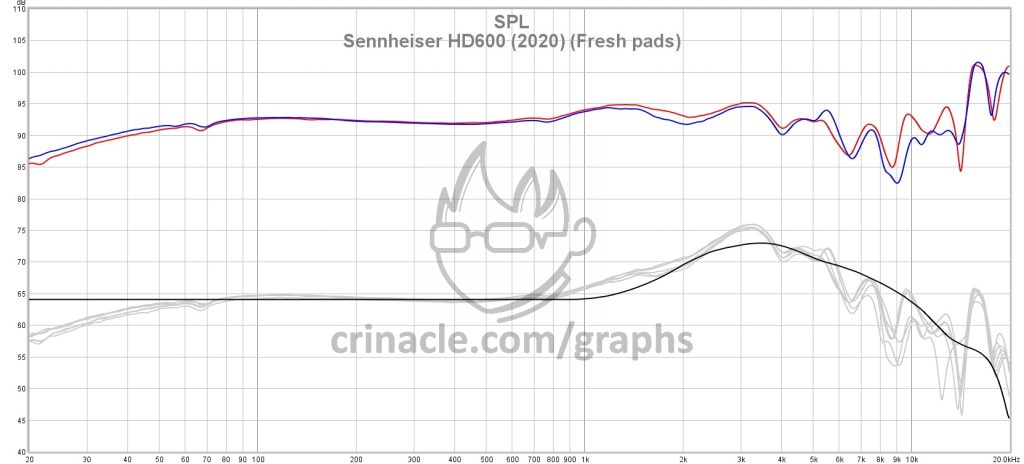

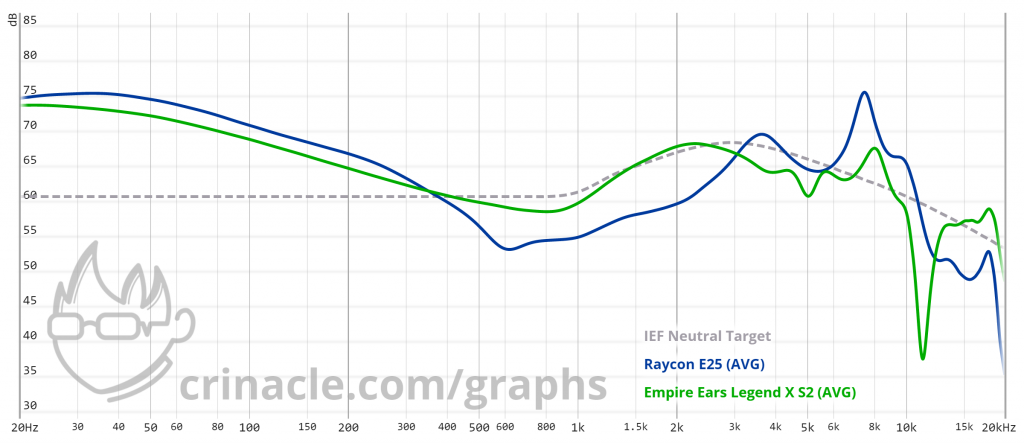

For instance, here we have a headphone that attempts a “balanced” or neutral kind of sound signature, and executes it well:

And here we have… whatever this is supposed to be:

Or perhaps the difference between a well-executed bassy-V and one that goes way too far:

The list goes on, and the examples are plentiful in both my ranking lists. I try to be as “signature agnostic” as possible in my evaluation of a transducer’s tonality; it should not matter if a manufacturer or consumer chooses to go for any kind of non-neutral signature (or non-Harman, for that matter). V-shaped, bassy, bright, dark, warm, and everything in between: as long as the desired tonal profile is executed properly, the final tone grade should reflect that.

The big keyphrase here is “executed properly”. If a headphone or IEM attempts a wild-and-wacky signature and it sounds horrible, that’s not the fault of my ranking system. Tonality may be up to the whims of subjective taste, but even then there are limits.

Technicalities

Immeasurable existence?

But for whatever reason, at least in my own opinion, frequency response isn’t the be-all and end-all when it comes to sound quality. There still seem to be other phenomena going on that affect the characteristics of a transducer, and while they are not completely independent of tonality, they are still separate enough to warrant their own metric.

“What is it then?” you may cry. Truth of the matter is… nobody who has personally observed these non-FR differences has a clue, me included. There are theories, of course (one of which I’ll debunk myself), but in general there is no real consensus. In effect, this is where the objectivist community shuns me and I move my butt firmly back onto the centrist fence.

Ladies and gents, welcome to the hazy world of “technicalities”.

Do note that whatever I write here is basically pseudoscience, in that most of it isn’t peer-reviewed or academically researched (or scientifically accurate, for that matter!). It is simply a description of how I personally interpret what I’m hearing and how I assign “markers” to identify how good an IEM is beyond the veil of personal preference and taste.

“Technicalities”, “technical ability”, or “technical performance” is an umbrella term that encompasses basically all non-tonal aspects of sound reproduction. Under IEF metrics, it refers to the following:

- Resolution (aka “detail”)

- Transients

- Imaging (aka “stereoimaging”)

- Positional accuracy

- Soundstage

- Timbre (though this involves tonality as well, more on this later)

You can see where the aforementioned “ironic dichotomy” between tone and technicalities comes in here: tonality may be the most easily measurable aspect of audio, but the interpretation of the measurements is probably one of the most subjective aspects of the hobby. Yet the concept of “technicalities”, while immeasurable and seemingly completely subjective, also implies a certain level of objectivity where more/less of something is always better (resolution, positional accuracy, speed etc.).

I guess I’ll start with the concept of “transients”, which is linked to both resolution and timbre.

Transients

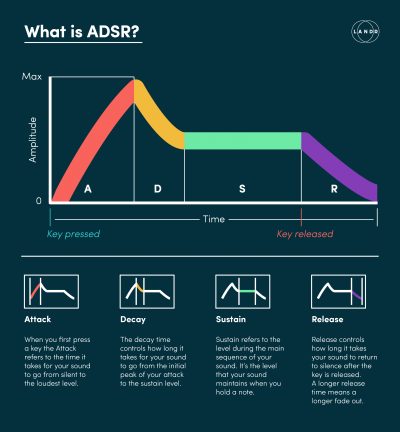

Also known as “speed” in other circles, transients as I personally define them comprise the initial “attack” function and the subsequent “decay” function. In the professional world of synthesisers, the term “ADSR envelope” is used, which stands for “Attack-Decay-Sustain-Release”.

I lump the A-D-S parameters into the “attack” term as a catch-all and rename the Release parameter back into decay for a few reasons:

- Given that we’re dealing with audio reproduction rather than audio synthesis, we can assume that the “attack” in the traditional ADSR envelope would be basically instantaneous in a transducer reproducing audio, and therefore a fixed variable that can be ignored.

- The ADSR envelope breaks down the length of the note’s “hit” into how the attack decays into the sustain. For transducers, I think this can be simplified to “length of attack”, assuming the above point holds.

- Having just two parameters (attack and decay) makes things easier to break down and explain.
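To make that simplification concrete, here’s a minimal Python sketch. The function names are hypothetical and the linear segments are an assumption (real envelopes are often curved). It implements a classic ADSR envelope, then expresses the two-parameter attack/decay model as the special case with an instant rise, no ADSR decay stage, full sustain (the “attack length”) and a release (the “decay”).

```python
def held_level(t: float, attack: float, decay: float, sustain_level: float) -> float:
    """Envelope level (0..1) while the note is held: attack ramp, decay ramp, then sustain."""
    if t < attack:
        return t / attack  # only reached when attack > 0
    if t < attack + decay:
        return 1.0 - (1.0 - sustain_level) * (t - attack) / decay
    return sustain_level


def adsr(t, attack, decay, sustain_level, note_off, release):
    """Classic linear ADSR envelope value at time t (seconds since note-on)."""
    if t < 0:
        return 0.0
    if t < note_off:
        return held_level(t, attack, decay, sustain_level)
    if release <= 0:
        return 0.0
    start = held_level(note_off, attack, decay, sustain_level)
    return max(0.0, start * (1.0 - (t - note_off) / release))


def attack_decay(t, attack_length, decay_length):
    """Two-parameter simplification: instant rise, hold at peak for attack_length,
    then fall to silence over decay_length (the ADSR 'release')."""
    return adsr(t, attack=0.0, decay=0.0, sustain_level=1.0,
                note_off=attack_length, release=decay_length)
```

For example, `attack_decay(t, 0.005, 0.02)` would describe a sharp, quickly decaying note, while `attack_decay(t, 0.05, 0.2)` would describe a blunt, lingering one.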

Attack

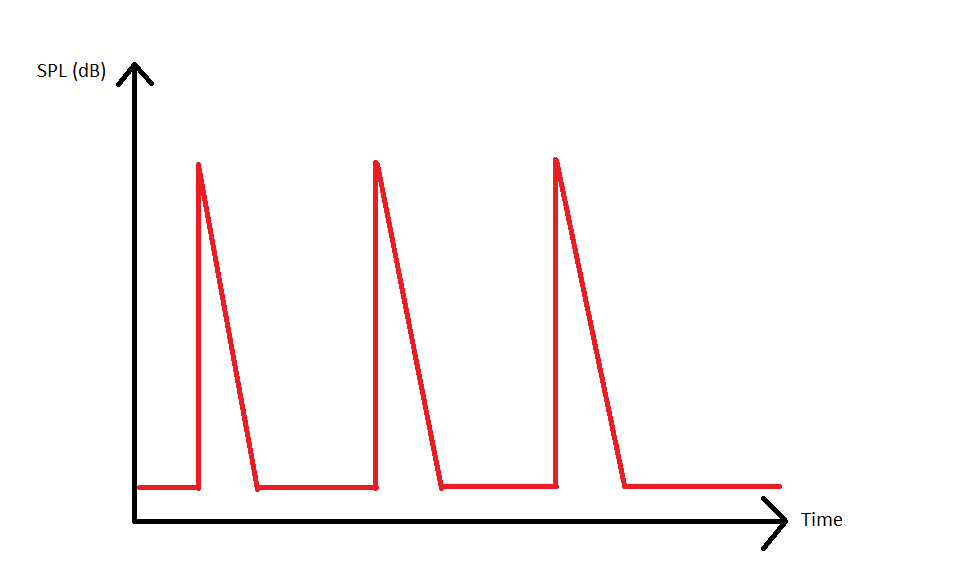

Under the assumption that a transducer (upon receiving the analog signal) will hit maximum SPL instantaneously, the next issue is how long this “point” gets dragged out. Too long, and it subjectively creates a muddy or congested effect. This is what I’d term “length of attack”, “attack length”, “attack speed” etc. In an ideal situation, the shorter the length of attack, the better.

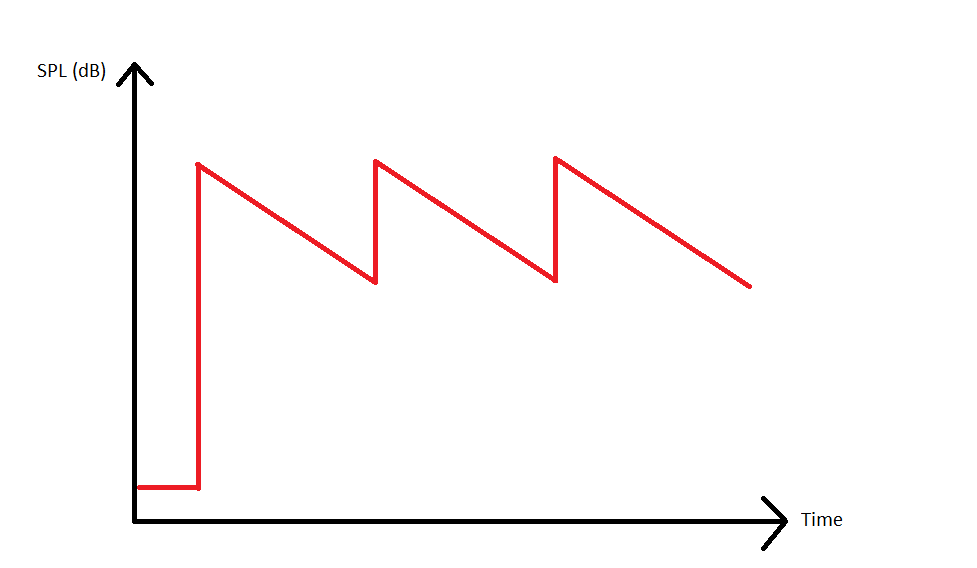

Short (sharp) attack vs long (blunt) attack, shown in 3 notes being struck in quick succession:

It’s hard to describe a “sharp” attack; notes simply come off as clean and well-defined when that happens, and if you don’t have a frame of reference it just sounds “normal”.

However, it’s easy to listen out for “blunt” attack, as a lot of low-quality drivers exhibit this quality. Plucked strings may come off as muted, percussion like banging on pillows; basically any instrument that is heavily reliant on that initial burst of SPL in the ADSR envelope will suffer when played back on a transducer with blunted attack.

Decay

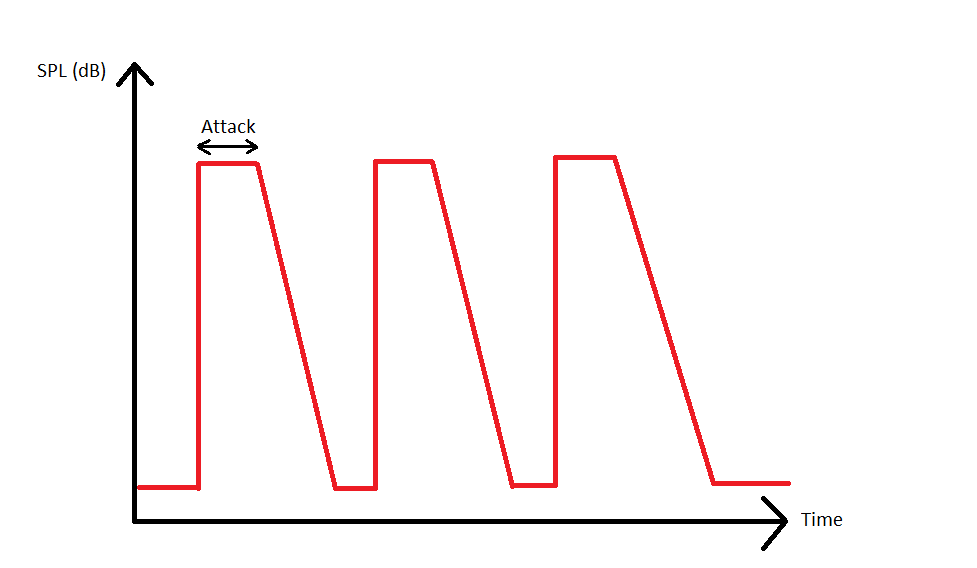

When most people talk about the speed of a driver, they’re usually referring to the attack function. It’s a much better objective metric, after all; shorter = better and there’s little room for argument. Decay, on the other hand, is a much more fickle metric to talk about; of course, too much decay is quite obviously detrimental to the integrity of the sound, but it’s also not like attack, where the shortest is objectively better. Decay is one of those things that requires a very delicate balance.

Low decay contributes to the metric of “definition”. When the notes attack fast and don’t linger much afterward, they are much more clearly distinguished and so better defined. However, in real life nothing has “zero decay”. Bang on a drum and the skin continues to vibrate for a short time after it was struck. Pluck a guitar string and the note still sustains long after you’ve let go. Add the effects of room acoustics and post-processing in mastering, and it becomes clear that what makes a sound “the sound” isn’t just the tone it produces but also the pattern in which it decays.

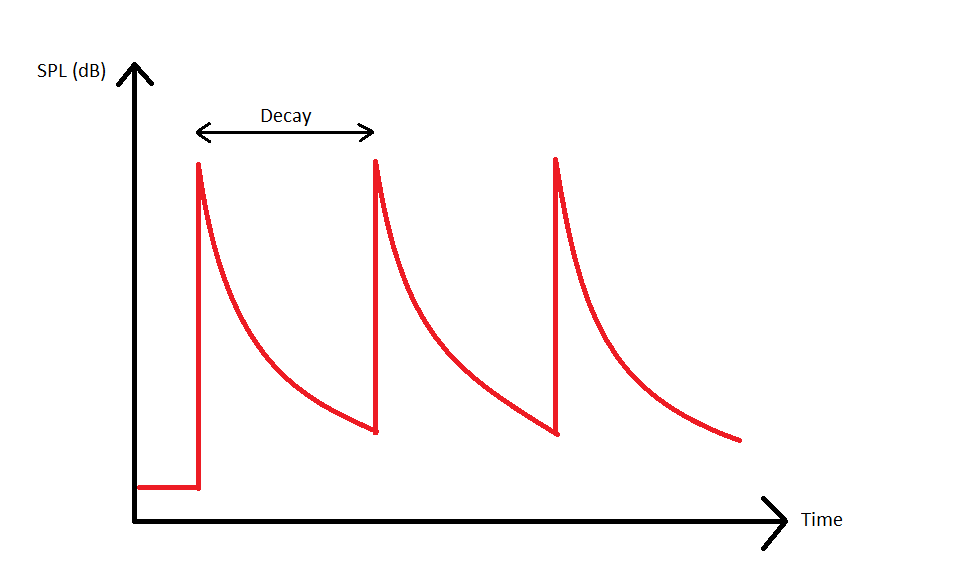

Here are the different types of decay visualised in graph form:

There is a problem with having too little decay: it doesn’t really represent what you’d hear in real life or with a good pair of speakers. Yes, the notes are very well defined, but they will sound unnatural. Examples of drivers with short decay are BAs and electrostats, which have generally been described as having an “ethereal” presence that I would personally attribute to them having far too little linger beyond the initial note. You find yourself wondering if the note was even struck at all because of how fast it disappeared.

Long decay is pretty self-explanatory; as you can see, the notes start smearing into one another and there is very little separation between each attack. Bad drivers are usually the cause of this, perhaps due to too limp a diaphragm material or too much acoustic resonance within the housing, who knows. The end effect, just like having a long attack, contributes to that muddy/congested sound.
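One way to put numbers on “smearing” is to ask how loud the previous note still is when the next one lands. A minimal sketch, assuming each note decays exponentially with a time constant tau (a simplification; real drivers and instruments needn’t decay this way) and using made-up tau values:

```python
import math


def residual_db(t_s: float, tau_s: float) -> float:
    """Level in dB (re. the note's peak) of a note whose amplitude decays as exp(-t / tau).

    20*log10(exp(-t/tau)) simplifies to -20/ln(10) * t/tau, about -8.69 dB per time constant.
    """
    return -20.0 * t_s / (tau_s * math.log(10))


# Hypothetical: notes struck every 125 ms (16th notes at 120 BPM).
spacing_s = 0.125
for label, tau_s in [("short decay", 0.01), ("moderate decay", 0.04), ("long decay", 0.2)]:
    level = residual_db(spacing_s, tau_s)
    print(f"{label:>14}: previous note still at {level:6.1f} dB when the next one lands")
```

With the longest tau, the previous note is only a few dB down when the next one starts, which is the overlap described above; with the shortest, it has effectively vanished.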

A while back, I mentioned that many theories have been put forth regarding “technicalities” and that I’d debunk one. Well buckle up, because now I’m about to denounce one of the most popular metrics in the headphone measurements game…

(The case against) Harmonic Distortion

Why don’t you post distortion graphs? Everyone else is doing it!

Harmonic distortion (or even worse, total harmonic distortion) is, with the exception of extreme fringe cases, virtually useless in the context of headphones, IEMs, and even sources.

For one thing, harmonic distortion behaves… differently at different frequencies. So the “industry standard” of publishing distortion values at 1000Hz is largely useless. Or, god forbid, a single-value metric like SINAD, which is highly reductionist and has near-zero correlation with actual listener preference.

For another, not all harmonic distortion is created equal, so publishing a plot of THD against frequency is still too simplified. The effects of auditory masking mean that lower-order harmonic distortion would largely be inaudible, especially at lower frequencies, and virtually every headphone and IEM out there has a distortion profile dominated by second- and third-order harmonics, typically under 1kHz.

In short, THD is largely irrelevant, and even a full breakdown of a headphone/IEM’s distortion profile isn’t going to tell you much, since it’s almost guaranteed to be lower-order-dominated anyway.
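To see why a single THD figure can’t tell you which orders dominate, recall that THD is the root-sum-square of all harmonic amplitudes relative to the fundamental. A minimal sketch with two hypothetical profiles that put the same levels on very different harmonics (the function name and numbers are illustrative only):

```python
import math


def thd_percent(harmonic_levels_db: dict[int, float]) -> float:
    """THD in % from harmonic levels given in dB relative to the fundamental.

    THD = sqrt(a2^2 + a3^2 + ...) / a1, and each squared ratio is 10^(dB/10).
    The harmonic order (the dict key) plays no part in the result.
    """
    total_power = sum(10 ** (level_db / 10) for level_db in harmonic_levels_db.values())
    return 100 * math.sqrt(total_power)


# Two hypothetical profiles: same levels, very different harmonic orders.
low_order = {2: -40.0, 3: -50.0}
high_order = {7: -40.0, 9: -50.0}
print(f"low-order: {thd_percent(low_order):.2f}%  high-order: {thd_percent(high_order):.2f}%")
```

Both print the same figure (about 1.05%), even though masking would treat the two profiles very differently.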

I have actually tried to test this myself out of morbid curiosity: I launched a DAW, manually added harmonic distortion, and noted the point at which I could actually start to hear differences. Here are the results:

- For a pure 1000Hz tone, I could barely make out third harmonic distortion (3HD) at 3%, and second harmonic distortion (2HD) at a little over 5%.

- For a pure 200Hz tone, I gave up after not hearing differences past 8%, even for 3HD. Didn’t even bother with 2HD, much less lower-frequency tones.

- For general music listening, the differences are even more subtle, even when harmonic distortion is added to the entire frequency range. It takes a ridiculous amount of 2HD to even begin to hear differences (think 10%+), and even then it’s only obvious for higher pitches and doesn’t at all sound like the “tubey warmth” you’d probably expect.
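A test like this boils down to adding a harmonic at a known level to a pure tone. Here’s a minimal stdlib-only sketch of such a test signal, plus a single-bin DFT to confirm the level that was added. The function names are hypothetical, the harmonics are assumed to be in phase with the fundamental, and this is not how any particular DAW plug-in or REW implements it. The single-bin DFT is exact here because 0.1 s at 48 kHz holds a whole number of cycles of both 1 kHz and 3 kHz.

```python
import math


def tone_with_harmonics(f0, harmonics_db, sample_rate=48000, duration=0.1):
    """Pure sine at f0 plus added harmonics, each level given in dB re. the fundamental.

    Assumption: harmonics are added in phase (0 degrees) with the fundamental.
    """
    n_samples = round(sample_rate * duration)
    signal = []
    for i in range(n_samples):
        t = i / sample_rate
        sample = math.sin(2 * math.pi * f0 * t)
        for order, level_db in harmonics_db.items():
            sample += 10 ** (level_db / 20) * math.sin(2 * math.pi * order * f0 * t)
        signal.append(sample)
    return signal


def bin_amplitude(signal, freq, sample_rate=48000):
    """Amplitude of one sinusoidal component via a single-bin DFT.

    Exact (up to rounding) when the signal holds a whole number of cycles of freq.
    """
    re = im = 0.0
    for i, x in enumerate(signal):
        phase = 2 * math.pi * freq * i / sample_rate
        re += x * math.cos(phase)
        im += x * math.sin(phase)
    return 2 * math.hypot(re, im) / len(signal)


# 1 kHz tone with third-harmonic distortion added at -54 dB.
sig = tone_with_harmonics(1000, {3: -54.0})
ratio = bin_amplitude(sig, 3000) / bin_amplitude(sig, 1000)
print(f"measured 3HD: {20 * math.log10(ratio):.1f} dB ({100 * ratio:.2f}%)")
```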

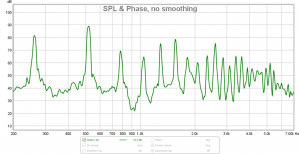

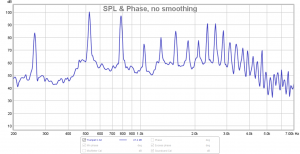

I’ve retested my findings with REW’s tone generator, which allows the addition of harmonic distortion. New results below:

- Pure 1000Hz tone
  - 2HD: 0.56% (-45dB)
  - 3HD: 0.20% (-54dB)

- Pure 500Hz tone
  - 2HD: 2.0% (-34dB)
  - 3HD: 1.0% (-40dB)

- Pure 200Hz tone
  - 2HD: 3.5% (-29dB)
  - 3HD: 1.3% (-38dB)

- Pure 50Hz tone
  - 2HD: 7.1% (-23dB)
  - 3HD: 2.5% (-32dB)
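The percentages and dB figures in these results are two views of the same ratio: level in dB = 20 * log10(percent / 100). A small sketch of the conversion (helper names hypothetical), which also shows that the “sub-1%” and “sub-0.1%” figures discussed next correspond to -40 dB and -60 dB:

```python
import math


def db_to_pct(level_db: float) -> float:
    """Harmonic level as a percentage of the fundamental, from dB re. the fundamental."""
    return 100 * 10 ** (level_db / 20)


def pct_to_db(percent: float) -> float:
    """Harmonic level in dB re. the fundamental, from a percentage."""
    return 20 * math.log10(percent / 100)


# A few of the REW thresholds listed above, converted back to percentages.
thresholds_db = {"1 kHz 2HD": -45, "1 kHz 3HD": -54, "500 Hz 2HD": -34, "200 Hz 2HD": -29, "50 Hz 2HD": -23}
for name, level_db in thresholds_db.items():
    print(f"{name}: {level_db} dB = {db_to_pct(level_db):.2f}%")

# Typical headphone/IEM THD figures, in dB.
print(f"1% = {pct_to_db(1.0):.0f} dB, 0.1% = {pct_to_db(0.1):.0f} dB")
```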

And remember, this is the absolute best-case scenario, since we’re only dealing in pure tones and not music. Once actual music listening is in the picture, the audibility threshold of such distortion goes way up.

What is the general range of THD you can expect out of a headphone or IEM? Sub-1%. And I’m being very liberal with the “1%” value I’ve chosen here, considering that it’s usually the peak THD, typically found in the sub-50Hz region; with the right measurement conditions and a low-noise microphone to eliminate artifacts from the environment, the actual distortion measured from the transducer at 100Hz and upwards would probably be closer to sub-0.1%. A far, far cry from the threshold of audibility, especially once you move away from pure tones and into music listening.

Two conclusions you can draw from here:

- Every other human being is able to pick out sub-0.1% THD in their headphones and differentiate them according to distortion characteristics, and my ears are totally broken.

- THD is irrelevant.

But here’s the thing: don’t take it from me. Take it from actual research into this very topic: the Gedlee papers (paper 1 and paper 2) and research performed by the same team that came up with the Harman Target.

The TL;DR:

- Gedlee’s research puts the correlation between THD and listener rating at r = -0.423. Loosely correlated, but ultimately not a reliable predictor for sound performance.

- IMD correlation is even lower at r = -0.345.

- Listen Inc’s research concludes that “none of the headphone distortion measurements could reliably predict listener preference based on audible distortion”, in reference to the metrics of THD, IMD and multitone distortion.

- Both research teams have suggested alternatives to traditional distortion measurements based on their results: Gedlee with the “Gedlee metric” and Listen Inc with non-coherent distortion. I will not comment on whether they are effective, as that is a separate topic altogether.

- Both research teams mention the phenomenon of auditory masking as a reason for the irrelevance of THD, with Gedlee even calling it “intuitively obvious”.
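A quick way to read those correlation coefficients: squaring r gives the share of the variance in listener ratings that a straight-line fit on the metric would explain (this assumes the usual simple linear-regression reading of r).

$$
r_{\mathrm{THD}}^2 = (-0.423)^2 \approx 0.18, \qquad r_{\mathrm{IMD}}^2 = (-0.345)^2 \approx 0.12
$$

In other words, roughly 18% and 12% respectively, leaving more than 80% of the variation in ratings unaccounted for by either metric.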

Due to all these reasons, I won’t be publishing distortion measurements. I encourage others in the measurement-publishing field to move away from harmonic distortion as well so as not to mislead the general populace, but it’s a free world.

The Overlaps

Where the worlds collide

While tonality and technicalities are largely separate, there are some ways in which they influence one another. After all, the frequency and time domains meld together to form what we all perceive as sound.

Timbre (pronounced “tAm-buh”)

At least within the context of IEF reviews and in my own personal definition, timbre is tonality with time-domain characteristics added on, more specifically decay. Or, even more specifically, the pattern of decay. Each instrument decays differently, so an IEM that excels at one particular style of instrument (bowed strings, plucked strings, percussion, vocals etc.) usually can’t be expected to perform as well for others.

As mentioned in the Decay section, an IEM needs to strike a balance that works with as many instruments as possible, since it can’t make use of room acoustics or the acoustics of one’s own body to create timbre. In practice, though, you sometimes get drivers that inherently have a timbre “flavour”; for instance:

- Plastic timbre: Characterised by a hollow sound, sometimes also described as a certain sense of weightlessness in the notes. This sometimes happens when the decay is unusually fast, and is exacerbated by a higher-frequency-biased tonality. Balanced armatures are the biggest offenders.

- Metallic timbre: Not necessarily due to an abnormally long decay but rather a ringing (AKA pulsing or oscillating) decay pattern. Misplaced peaks in the treble can also cause this, though the metallic effect can also manifest itself in baritone and bass instruments depending on severity. Anecdotal examples of transducers with metallic timbre include the Dita Dream, the JVC HA-FD01, and even the venerable Focal Utopia.

Temperature

Also known as warmth, or the lack thereof. How warm an IEM sounds is a combination of transients and tonality, though more so tonality. To generalise very broadly, an IEM with a lower-frequency-biased tonality is more likely to have a lot of warmth, while an IEM with a higher-frequency-biased tonality is less likely to. This likelihood is boosted by the length of decay, wherein more decay translates to more warmth, in theory.

This is all within a certain range, of course; decay beyond a certain point no longer sounds warm but rather turns into mud, though some amateurs seem to equate warmth with the muddy effect.

On the other side of the spectrum, I don’t really like to use the term “cold”, since it carries a particularly negative connotation; I prefer a scale that starts with “mud” at the worst, “warm” somewhere beyond that, and finally “room temperature” to denote a lack of warmth. For instance, some might describe something like the ER4 as cold; I’d personally just say that it doesn’t have a lot of warmth.

Neither the presence nor the absence of warmth is objectively good by itself; what matters is how well it plays with one’s personal preferences.

Texture

Texture is mostly derived from transients, in particular the shape of the attack and length of decay.

When there is enough decay (though again, not too much), the notes can overlap and so create a “smoothed” effect. That said, it is also possible to be both high-definition and smooth if the transducer strikes the right balance. As mentioned, an IEM that is too smoothed has too much decay, and so the aforementioned detriments of long decay kick in. By contrast, a textured sound comes from low decay, with each note well separated from the next. Too much texture results in a grainy effect, as well as the usual shortcoming of sounding unnatural.

On the other hand, smoothness and graininess might also be associated with tonality, or more specifically harmonic distortion. Even-order distortion is generally considered pleasant, as it harmonises on whole octaves and can create a smoothed, “musical” sound; the “tube sound” is commonly associated with second-order distortion, which is said to give it its distinct signature. Odd-order distortion is generally considered destructive due to its relative irrelevance to octave harmony, and is often described as giving a fuzzy or grainy effect. As balanced armatures have consistently demonstrated dominant third-order distortion (some as severe as 1%, as opposed to the usual 0.01% or lower), this could be an explanation for the grain (or texture) that some hear in BA IEMs, though I’m not optimistic.
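The octave relationship can be checked directly: the nth harmonic sits 12 * log2(n) semitones above the fundamental. A minimal sketch (function name hypothetical, 12-tone equal temperament assumed as the yardstick) showing that the 2nd and 4th harmonics land exactly on octaves, while the 3rd and 5th land on a fifth and a (slightly flat) major third above an octave; the output also shows that a higher even order such as the 6th lands on a fifth.

```python
import math


def interval_above_fundamental(order: int) -> tuple[int, float]:
    """Whole octaves and leftover semitones (12-TET) of the nth harmonic above the fundamental."""
    semitones = 12 * math.log2(order)
    octaves = int(semitones // 12)
    return octaves, semitones - 12 * octaves


for order in range(2, 7):
    octaves, rest = interval_above_fundamental(order)
    kind = "even" if order % 2 == 0 else "odd"
    print(f"H{order} ({kind}): {octaves} octave(s) + {rest:.2f} semitones")
```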

(And yes, I understand that all this is hypocritical of me just as I finished denouncing the evils of distortion measurements.)

Just like temperature, texture is not a measure of objective performance and is all down to personal taste. However, going too far in either direction (too smoothed, too textured) can be objectively bad, and so can count as a negative in the technicalities grade.

Cited works:

Geddes, E. R., & Lee, L. W. (2003, October). Auditory perception of nonlinear distortion-theory. In Audio Engineering Society Convention 115. Audio Engineering Society.

Temme, S., Olive, S., Tatarunis, S., Welti, T., & McMullin, E. (2014, October). The correlation between distortion audibility and listener preference in headphones. In Audio Engineering Society Convention 137. Audio Engineering Society.

Afterword: Yes, I know that, scientifically speaking, transducers such as headphones and IEMs are (generally) minimum-phase devices. Whatever exists in the time domain will be reflected in the frequency domain for these transducers, so all this talk about transients and the time domain is technically inaccurate in a truly objective sense. However, I can’t really come up with an alternative explanation for the phenomena I’ve experienced over the years that I’ve always attributed to time-domain behaviour, so all the things I’ve talked about here are essentially placeholder terms for the time being.
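As a rough illustration of that time/frequency link, take the simplest idealised case: a single second-order band-pass resonance with centre frequency f_0 and quality factor Q (symbols introduced here for illustration only). Its ringing decays as e^{-t/τ}, and the time constant τ is tied directly to the -3 dB bandwidth B of the peak it leaves in the frequency response:

$$
\tau = \frac{2Q}{\omega_0} = \frac{Q}{\pi f_0} = \frac{1}{\pi B}, \qquad B = \frac{f_0}{Q}
$$

For example, a 5 kHz peak with Q = 10 gives τ ≈ 0.64 ms, so it takes about τ ln(1000) ≈ 4.4 ms to ring down by 60 dB; a narrower (higher-Q) peak rings proportionally longer.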

Cheers to the next few audiophiles who will publish the next big thing in headphone acoustic science, and hopefully prove me right.

Support me on Patreon to get access to tentative ranks, the exclusive “Clubhouse” Discord server and/or access to the Premium Graph Comparison Tool! With current efforts to measure more headphones, those in the exclusive Patreon Discord server get to see those measurements first before anybody else.

My usual thanks to all my current supporters and shoutouts to my big money boys:

“McMadface”

Man Ho

Denis

Alexander

Tiffany

Jonathan

23 thoughts on “The Tonal-Technical Dichotomy: The IEF Evaluation System”

Yeah, I think your ears are broken. I just tested myself and on a 1k sine I can easily hear third harmonics down to -55 (0.2%) and second harmonics down to -50 dB (0.32%).

For 200 Hz: 2HD at -36 (1.6%) and 3HD as low as -52 dB (.3%).

I tested with REW’s generator function; phase of the harmonics was 0 degrees. Headphone I used was the AD1000X.

I can also clearly hear the grating distortion of my XBA H3 around 4 kHz (IIRC at ~1%).

You should be careful with blanket statements like “THD is (largely) irrelevant” and recommendations like “yeah, just completely ignore non-linear distortion because it’s just gonna mislead the general populace lul”.

Interesting, I didn’t realise that REW had a harmonic distortion option. I tested it out on REW again and I’m getting similar results to you as well.

It might be that the VST plug-in I used previously was fudged on pure tones, but my point still stands on harmonic distortion being irrelevant in the context of headphones and IEMs playing back music. It’s definitely far easier to pick apart differences in pure tones, whether it be distortion or even volume changes.

In any case, let’s just ignore my own tests and look at the research that I’ve cited; THD (IMD and multitone too) is largely irrelevant when it comes to predicting listener preference. So if I (or anyone else) were to create a database of THD measurements and rank products accordingly… it would indeed be misleading.

I actually agree with you and the research that distortion is indeed pretty irrelevant but I still think it is an interesting datapoint because when looking at an individual system you can’t apply the broad rule of headphones having no distortion.

I really liked rin choi’s distortion presentation where he measured at 100dB @1kHz and cut the graph off at a realistic .1% instead of having it go to .0000001%.

If there is nothing above 200 Hz you can say pretty confidently that it doesn’t matter. However if there is a broader elevation like on the H3 it can indicate a fuccy wuccy.

For the bass distortion you can point to REW so everyone can test themselves to see whether 4% second harmonic at 20 Hz matters (it doesn’t). The research says that if harmonic distortion is inaudible then IMD is as well.

I’m not proposing that you should take the average THD value into a ranking because I’ll be the first to agree that it is misleading. I just think it would be neat to have another squiggly line to look at and interpret.

REW has a stepped sine function in the RTA window. 1/6 octave steps and a decent FFT size with some averaging are good enough to get decent results even in somewhat noisy environments, additionally aided by 100dB giving a better signal/noise ratio than the 94 from IEC 60268-7.

You are not right about distortion. This is a purely technical estimate. It simply means that the headphones do not correctly transmit tone. You may not hear it, but the cry can feel. Almost half of the measured headphones have a low estimate for distortion:

https://www.rtings.com/headphones/tests/weighted-harmonic-distortion

But the overall estimate is not important; what is important is precisely how distortion behaves across frequency, since in some cases distortion, for example at low frequencies, can be very large. If I see big distortion, I understand that these headphones are not technically well made.

Rtings uses weighted harmonic distortion, which is better than straight-THD though I’m still skeptical on its correlation with listener preference.

And as I’ve mentioned in the article, THD is largely irrelevant except in extreme fringe cases, which fall under “big distortion” (>5% I presume?). But if we’re talking about a headphone with 0.01% THD versus 0.1% THD, that’s where the research (and my own anecdotal experience) says that THD is a poor predictor of sound performance.

The difference in the cost of headphones with THD 0.1% and 0.01% can be very large. IMHO, THD also does not affect sound quality. But THD has great importance for detail and stage. IMHO, THD and waterfall can be indirectly evaluated by ADSR. In any case, thank you so much for an interesting article.

Interesting how you relate things to attack/decay, I never thought about that. Like relating plastic timbre to fast decay + bright tuning reminded me instantly of the Sundara, as it has all 3 of those things. You might be right.

very nice writeup. i don’t agree with the “logic” behind your thd conclusion. just because you don’t hear it doesn’t mean it can’t be true (although it still probably is true, haha). but that logic… 😉

also, texture can be somewhat dependent on treble response and quality. sharp upper treble peaks or simply more upper treble can give a rough texture to the tonality. while less upper treble and more lower treble (1-4k) can give a smoother textural quality.

anyhow, you strike a good balance of objective and subjective impressions and that’s some good info on decay. and why I generally prefer DD myself. but it depends on many factors just as you say… keep up the good measurements and reviews…

Crinacle, a little bit off the subject. But regarding technicalities, which one is more proficient, the EX1000 or the iSine 20?

I think distortion is noticeable when you try to EQ

Especially when adding sub-bass when it’s below neutral, sounds muddy

I’m pretty sure you’re misusing the term ‘attack’ here. Regarding synthesisers or compressors etc… attack is the upward slope from nothing to ‘peak’. You acknowledge this is always practically instant with the drivers of IEMs.

What you’re actually describing regarding technical ability is sustain. I think you’re confused because an ADSR envelope has the extra ability to maintain a tone before it releases that isn’t necessarily the peak, and therefore experiences a ‘decay’ section from the peak to the plateau of the ‘sustained’ section as shown in the ADSR figure you provided.

You can alter ADSR envelopes to completely remove any ‘decay’ section so that the sustain itself is the peak section maintained for however long you set the ‘sustain’. In this regard, the ADSR envelope will reflect the shapes you display in the graph of slow and fast transient IEMs. Here, you are comparing how fast / slow the sustained peak is, the length of ‘sustain’, not attack. What you then describe as decay is actually release, which as mentioned earlier is different from decay, especially when you assume shapes where the decay is zero (again as indicated in your IEM transient comparison graph).

Great article though, Crinacle; just leaving my 2 cents as someone with experience in synthesizers / music production.

Never mind, I see you’ve addressed this in the article. Derp. My bad.

That said, I still worry about misappropriating technical terms for ‘casual’ descriptions. Not only can it cause confusion among those in the industry who are used to other definitions, but are we also absolutely sure that an IEM’s ability to go from silence to peak is practically instant, and beyond the sensitivity of the human ear to affect texture / timbre? ‘Attack’ in its technical sense may be useful for description, but that will get confusing when we use it to describe the body of the sound before it decays (e.g. either the sustain or the entire A-D-S portion of the envelope).

> are we absolutely sure that the ability of an IEM to produce sound from silence to peak is practically instant

Not quite, that’s more an assumption to simplify the breakdown a little more. Kind of like “ignoring air resistance”, but for audio.

The Attack-Decay explanation for transducer transients would get a little confusing for those who are familiar with the ADSR envelope, yes, but I feel it’s the most intuitive way to get my point across. I also considered “A-D-R”, which is the ADSR concept with sustain taken out of the equation, but I feel that would get really complicated to explain and harder to reference in reviews.

I’m glad I read your explanation in this article of how you use the terms “attack” and “decay”.

As someone who is familiar with ADSR, I previously assumed that your use of these terms was with that frame of reference. Now that I know you are using them differently, I am equipped to interpret your reviews more accurately. Thanks.

If only every reviewer was this transparent.

This is an excellent piece. You bring a good balance of evidence and opinion, and you identify which is which. Admitting the weaknesses and contradictions in your writing is refreshing.

I’m a long-time reader but first-time poster. Keep up the good work for the benefit of us lurkers.

Is it true that the ideal tonal curves are a result of historical coincidence? The producers making the music equalise a piece to make it sound good on, say, the Harman curve, but theoretically they could have made it sound good on a perfectly flat curve, the “And here we have… whatever this is supposed to be” target curve, or any other; once a critical mass of producers adopted that curve, equipment manufacturers would be forced to conform to it.

And if that is so, it makes me wonder which curve is the easiest for manufacturers to make. Is there some curve whose adoption would lead to cheaper audio equipment?

Most music is mixed on nearfield studio monitors, which are supposed to measure flat. “Speaker-flat” is far more intuitive than “headphone-flat”: it is simply a straight line on a free-field microphone. This doesn’t mean that such music will only sound good on flat studio monitors (the Yamaha NS-10 is a legendary monitor notorious for NOT sounding great for music listening); rather, the philosophy is “if it sounds good on these monitors, then it should sound good on most other playback systems”.

The Harman target is based on the assumption that the listener is listening to professionally-mastered music, i.e. what I’ve written above. I’m sure that if a studio were to mix on Harman-tuned speakers, then the ideal playback setup would consequently be a pair of dead-flat studio monitors.

Now, one could mix music on a transducer with wonky tonality, but then the philosophy changes to “it sounds good ONLY on the monitors the studio uses, and horrible on everything else”. Clearly not ideal.

Two years later, I would just like to add that the Harman Curve was published in 2012, so there was no history of music being mixed to that target.

I suspect that if you don’t care for a transducer that follows the source signal more accurately, you may want to look at the sources and amps you use for listening. Frequency response will be somewhat subjective due to the open-ear response and, with speakers, channel mixing at lower frequencies (which is why a rising low end seems flat on earphones), but a proper recording and amplification chain doesn’t need ‘enhancing’ from the transducer unless it’s already lacking. Once the response is close enough to correct for proper balance, things shouldn’t sound overdamped unless there’s compression (overdamping) of the initial response. Perhaps take a look at that aspect of BAs. I do tend to find that open-back BAs sound more right in the bass, though not so much due to an artificially lengthened decay as to a general lack of overdamping. This seemingly isn’t an issue in other ranges.

I’m relatively new to this sector, but from what I understand, is it correct to say, theoretically and scientifically, that irrespective of the drivers, driver combinations and other variables, if you can get two IEMs or two headphones to match the same frequency response curve, say the Harman target, then they should sound the same?

First of all, congrats on an excellent article. Well researched and documented, with very useful additions from your personal experience and testing.

OTOH, I would disagree with your conclusion that distortion measurements do not matter. Yes, the THD number is useless and doesn’t correlate with audibility/preference; that’s already well studied and we can take it as fact.

However, the harmonic distortion spectrum is not the same as THD, and it is quite relevant AFAICS. It very much matters for timbre, see https://en.wikipedia.org/wiki/Timbre#Harmonics. It also partially matters for your concepts of tonality/temperature/texture (I’m also not so sure about your terminology; timbre/tonality/temperature seem to be ~the same thing).

One example: what you call the “Plastic Timbre” of BA drivers seems to be mostly an effect of HD3, see https://www.superbestaudiofriends.org/index.php?threads/why-balanced-armature-drivers-suck.11485/. Many others have (generally) described dynamic drivers as more round/natural and BAs as more precise/plasticky, and that’s exactly how the differences between H2 and H3 are described by most people/studies.

You also seem to neglect one effect: higher-order harmonics are progressively more audible. Yes, H2 is hard to hear, and the literature puts its audibility threshold around 0.1% in the most sensitive low-kHz range (pretty much the same ballpark as your own testing). But H2 is just the least audible harmonic. H3/4/5/6 are progressively more audible, and you can see that in your own tests: you heard H3 at levels 8-9 dB below where you heard H2. That effect continues, and H5/7/9/etc. will be audible at much lower levels. And they quite often measure above audibility thresholds, especially at high SPLs.

IMO, you should add the kind of HD spectrum measurements that ASR & Archimago have.

https://www.audiosciencereview.com/forum/index.php?attachments/aurorus-audio-borealis-headphone-distortion-thd-measurement-png.263262/

https://1.bp.blogspot.com/-ExiBHnhauBs/X2oTUlwwiJI/AAAAAAAAX9I/KYUr_B4WTd4z7WusALPwYTXAAJk8Edk9QCLcBGAsYHQ/s1600/RME%2BADI-2%2BPro%2BFS%2BR%2BBE%2B-%2BFrequency%2BStep%2BHarmonic%2BDistortion.png

The ASR graphs might be too simple, with just H2/H3, and Archimago’s a bit too busy, going up to H9. Up to H5/H7 could be a good compromise.

Yes, it’s a lot more work for you, but just saying that it doesn’t matter isn’t exactly right. Sounds more like you chose an easy cop-out 🙂

Why can’t people quantify transients by measuring the impulse response? This is done with speakers, why not IEMs?