
So… a lot of rating systems kinda suck.

Yes I know that it’s rich coming from me, the guy who practically made his living through glorified tier lists. But here I’d argue that, just like putting multiple drivers in an IEM, the problem isn’t the concept but rather the execution. Skews, biases, general disregard for scaling: all of these result in practically unusable distribution curves, and so unreliable ratings.

Disagreements about individual reviews aside, I’m not here to bash differing opinions. This is an article purely on the execution of rating and ranking systems of various websites, though of course also critiquing the overly-positive vibe of many publications that may be leading our hobby to its downfall if not corrected.

For a full breakdown of the data used in this analysis, refer to this spreadsheet.


WhatHiFi

WhatHiFi is possibly the largest audio-focused review site out there today, and their influence should not be underestimated. While we enthusiasts may shun them and laugh at their articles more than take them seriously, it’s no secret that mainstream media basically treats their word as gospel.

But disregarding personal opinions on the site itself, there is one thing we can use to objectively judge their own judging system: math. WhatHiFi uses a fairly common 5-star rating system, though unlike most others using a star-based system WhatHiFi does not use half-stars. 1, 2, 3, 4, or 5, nothing in-between.

Many non-enthusiasts consider a 5-star rating from WhatHiFi (or even the 4-star) the ultimate endorsement, perhaps the ultimate proof that a product is truly worth every cent the retailer asks for. But how special is it exactly?

The data collected is based on WhatHiFi’s headphone and earphone reviews from October 3rd, 2019 till today, for a total of 90 data samples.

| Rating | Count | Population | Top % |
| --- | --- | --- | --- |
| 5 star | 32 | 35.56% | 35.56% |
| 4 star | 35 | 38.89% | 74.44% |
| 3 star | 22 | 24.44% | 98.89% |
| 2 star | 1 | 1.11% | 100.00% |
| 1 star | 0 | 0% | – |

If your product was awarded at least a “coveted” 4-star, congratulations! It means basically nothing.

Even the 5-star award doesn’t mean much considering that it represents the top 35% of awards, which could mean anything between “best of the best” and “above average”. To put it all in context, even the 3-star award (which would be a 60/100 on a standard 100-point scale) is rarer than either the 4-star or the 5-star at only 24% of the total awardees, while the 4 and 5-star awardees combined make up a whopping 74.44%.
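For anyone who wants to double-check the percentages, here is a minimal Python sketch that rebuilds the "Population" and "Top %" columns from the counts in the table above. The function and variable names are just illustrative, not anything from the spreadsheet.

```python
# Counts per star rating, copied from the WhatHiFi table above (90 reviews).
counts = {5: 32, 4: 35, 3: 22, 2: 1, 1: 0}

def share_and_cumulative(counts):
    """Return [(rating, share %, cumulative 'top %')], best rating first."""
    total = sum(counts.values())
    rows, running = [], 0
    for rating in sorted(counts, reverse=True):
        running += counts[rating]
        rows.append((rating, 100 * counts[rating] / total, 100 * running / total))
    return rows

rows = share_and_cumulative(counts)
for rating, share, top in rows:
    print(f"{rating} star: {share:.2f}% of reviews, top {top:.2f}%")
```

The "Top %" figure is just a running total from the best rating downwards, which is why a 4-star lands in the "top 74.44%".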

Basically, with WhatHiFi you have to really read between the lines. Statistically speaking, a 4-star WhatHiFi award is average. You’d have a better time ignoring everything that they didn’t award 5-stars because 4-and-below essentially represent mediocrity, and I’m sure the average consumer wouldn’t want that.

So looking at WhatHiFi’s rating system from a more abstract point of view, we see the following:

- 2 out of 5 possible ratings effectively unused (1-star and 2-star)

- A rating distribution skewed heavily toward the positive end of the scale

- 74.44% of awardees occupying the top two possible ratings (4-star and 5-star)

Mathematically speaking, this is a garbage performance scale. I wish my schools graded me like this.

SoundGuys

Probably the runner-up for the title of “most popular audio-centric review site”, SoundGuys is another that holds massive sway over the mainstream audio market. They’re arguably better than WhatHiFi too; for one thing they make use of and publish their own frequency response measurements, which puts them above the pack of many audiophile-oriented review sites in my books.

That said, their measurements are pretty inconsistent, and while they’ve now disclosed that they use a B&K 5128 for measurements, all that’s a separate topic altogether.

Now in terms of their rating system, SoundGuys does away with the (frankly antiquated) 5-star system and instead goes for an out-of-10 system, rounded to one decimal place. This gives a lot more flexibility and leeway to properly set defined boundaries and “leagues” between performance levels, assuming that one uses the entire scale.

ASSUMING THAT ONE USES THE ENTIRE SCALE.

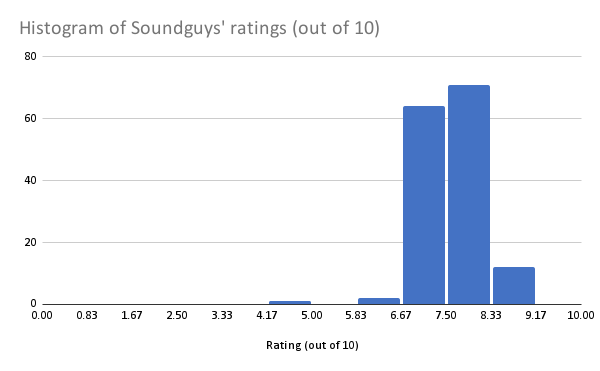

The data collected is based on SoundGuys’ most recent headphone and earphone reviews, up to 150 data samples.

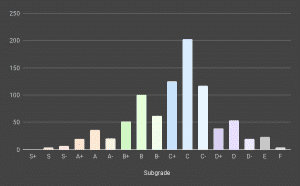

Here we have a prime example of scale inefficiency. A full 10-point scale, yet a vast majority of entries distributed between 7 and 8.5. Absolute madness.

Quick question: out of 10, what would you reasonably expect “average” to sit around? A 5? Maybe a 6?

| Statistic | Value |
| --- | --- |
| Average | 7.56 |
| Median | 7.5 |
| Top 10% | 8.3 |
| Top 25% | 8 |
| Top 50% | 7.5 |
| Top 75% | 7.2 |
| Max score | 9 |
| Min score | 4.4 |

How about 7.5.

Not even that, a score of 7.2 would put a product at the top 75%. Three-quarters of what SoundGuys have reviewed recently have been awarded at least a 7.2, which under a reasonable performance scale would be fairly excellent. Hell, me in university would’ve killed for a 72% average.
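If you want to see how "top X%" cutoffs like the ones in the table can be derived, here is a minimal Python sketch. It assumes a nearest-rank rule (sort the scores from best to worst and read off the score of the last product inside the top slice); the spreadsheet may well use a different percentile convention. The `scores` list is made up purely for illustration and is not the SoundGuys data.

```python
import math

def top_cutoff(scores, top_percent):
    """Lowest score that still sits inside the top `top_percent` % of `scores`."""
    ranked = sorted(scores, reverse=True)
    # Number of products that make up the top slice (at least one).
    k = max(1, math.ceil(len(ranked) * top_percent / 100))
    return ranked[k - 1]

# Hypothetical scores for illustration only; NOT the SoundGuys data set.
scores = [9.0, 8.4, 8.1, 8.0, 7.8, 7.5, 7.5, 7.3, 7.2, 7.2, 6.9, 4.4]

for pct in (10, 25, 50, 75):
    print(f"Top {pct}%: {top_cutoff(scores, pct)}")
```

Read this way, "top 75%" at 7.2 means three out of every four products scored 7.2 or higher, which is exactly why that cutoff says so little.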

This is also compounded by the fact that SoundGuys seem to be deathly afraid of awarding anything above a 9.0, which could give them the appearance of having higher standards but doesn’t do much when they’re also deathly afraid of awarding anything below a 7.0. Effectively, the SoundGuys rating system is so constrained that a single 0.1 rating difference represents far too large a shift in actual performance.

So, while I prefer the use of an out-of-10 system over the 5-star rating system, at least WhatHiFi is willing to use 60% of their scale. SoundGuys, on the other hand, barely 20%.
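One rough way to arrive at figures like these, sketched in Python. Two things here are assumptions made for the sketch rather than definitions from the data: the 5% cutoff for counting a rating level as "in use", and treating 7.0 to 9.0 as the band SoundGuys actually works in.

```python
def scale_usage(counts, min_share=0.05):
    """Percent of rating levels that hold at least `min_share` of all reviews."""
    total = sum(counts.values())
    used = [level for level, n in counts.items() if n / total >= min_share]
    return 100 * len(used) / len(counts)

# WhatHiFi counts per star rating, from the table earlier in the article.
whathifi = {5: 32, 4: 35, 3: 22, 2: 1, 1: 0}
print(f"WhatHiFi: {scale_usage(whathifi):.0f}% of the scale in use")

# SoundGuys: no per-score counts are listed, so this only measures the width
# of the assumed 7.0 to 9.0 band against a 10-point scale.
soundguys_band = 100 * (9.0 - 7.0) / 10
print(f"SoundGuys: {soundguys_band:.0f}% of the scale in use")
```

A stricter or looser `min_share` would change the WhatHiFi number, so treat it as a ballpark rather than a precise metric.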

Headfonics

While the other two sites are fairly well-respected by the mainstream but not so much in the audiophile community, Headfonics is one that is backlinked quite a bit by numerous audiophile forums like Head-Fi, even being the sponsor of many CanJams. Like SoundGuys, they use an out-of-10 rating system.

So it only stands to reason that one of the audiophile review sites would have a rating system that isn’t completely skewed and biased, and shows that audiophile reviewers have far higher standards than our mainstream counterparts… right?

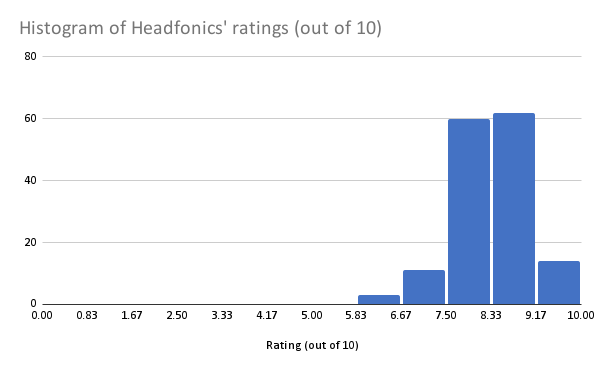

The data collected is based on Headfonics’ most recent headphone and earphone reviews, up to 75 data samples for each type for a total of 150 data samples.

| Statistic | Value |
| --- | --- |
| Average | 8.332 |
| Median | 8.4 |
| Top 10% | 9.1 |
| Top 25% | 8.7 |
| Top 50% | 8.4 |
| Top 75% | 8 |
| Max score | 9.9 |
| Min score | 5.9 |

If you thought SoundGuys was bad, you haven’t seen anything yet.

What kind of screwed-up performance scale has the average set at 8.33/10? I’m fine with the top 10% being at 9 and above, but not when the top 75% is… 8/10?!

I’m honestly ashamed that an audiophile review site would have a rating system that’s far more skewed and biased than anything I’ve seen from mainstream media. Basically nothing is under 6/10? Really? We’re supposed to have higher standards as enthusiasts, not lower!

Look, the data speaks for itself. If everything is “good”, nothing is.

Headphonesty

Headphonesty’s a bit of a mixed bag when it comes to the editorial team. On one hand, you have extremely knowledgeable contributors who go out of their way to learn more about the hobby as a whole, and as such put out some of the highest quality articles I’ve read in the portable-audio industry.

On the other hand… well, again, topic for another time. Regardless, Headphonesty is undoubtedly one of the more popular of the audiophile sites with the traffic to back it up, but as we’ve established, popularity is no measure of competence. Like WhatHiFi, Headphonesty also uses a star rating system, though it allows for the use of the half-star, thereby increasing the number of ratings that can be awarded from a paltry 5 to a comfortable 10.

So they’ve used a slightly more flexible rating system. But have they really made use of it?

The data collected is based on Headphonesty’s most recent headphone and earphone reviews (only those using the star rating system), up to 150 data samples.

| Statistic | Value |
| --- | --- |
| Average | 3.8 |
| Median | 4 |
| Top 10% | 4.5 |
| Top 25% | 4.5 |
| Top 50% | 4 |
| Top 75% | 3.5 |
| Max score | 5 |
| Min score | 1.5 |

Well… it’s not great, but after the previous trainwrecks this looks reasonable by comparison. Don’t get me wrong, a median of 4 on what is basically an out-of-5 rating system is still indicative of some major skews and biases behind the scenes, but at the very least it seems that the reviewers at Headphonesty are willing to go below a 3-star rating if need be.

Next to WhatHiFi’s 4-star-being-top-75%, Headphonesty has the top 75% at… 3.5 stars. An improvement, but in a vacuum this is still a terrible scaling system. C’mon guys, be a little less trigger-happy with the 4s and 5s. Not everything can be great.

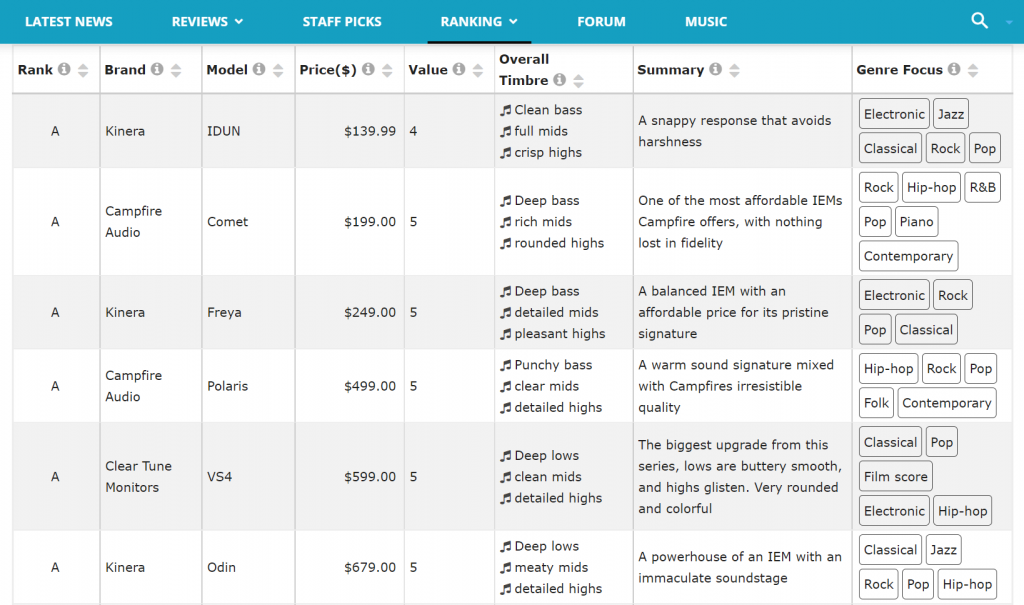

MajorHiFi

MajorHiFi is a review website owned by Audio46, which is an audio store based in New York City. MajorHiFi isn’t that popular especially relative to the other four (hell, relative to In-Ear Fidelity too) but I’m adding them into this analysis because they’ve made ranking lists of headphones and earphones like a certain someone.

Really MajorHiFi? Even calling them “Ranking Lists”? At least use a different description. Tierlists are also a thing.

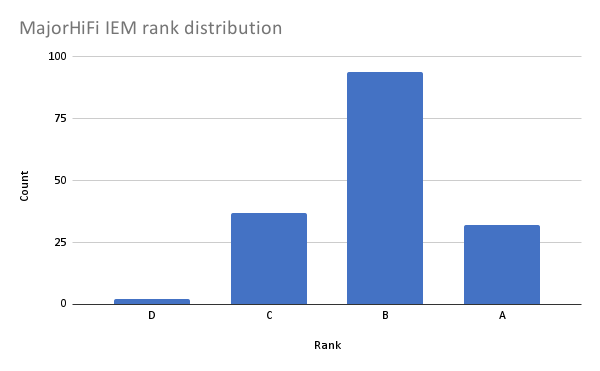

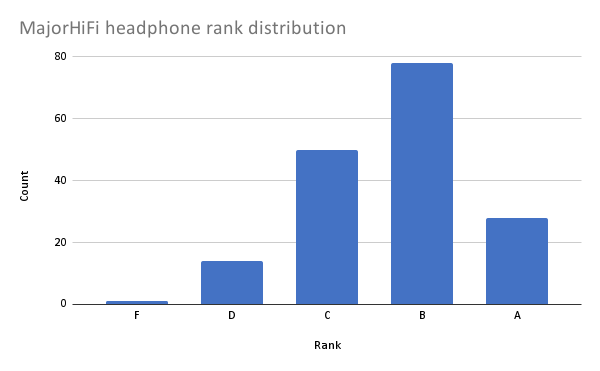

The data collected here is everything that was displayed on MajorHiFi’s respective ranking lists.

| IEM Rank | Count | Top % |
| --- | --- | --- |
| A | 32 | 19.39% |
| B | 94 | 76.36% |
| C | 37 | 98.79% |
| D | 2 | 100.00% |

| Headphone Rank | Count | Top % |
| --- | --- | --- |
| A | 28 | 16.37% |
| B | 78 | 61.99% |
| C | 50 | 91.23% |
| D | 14 | 99.42% |
| F | 1 | 100.00% |

Per usual, the data speaks for itself. The median for both ranking lists is “B”, which is the second highest grade on the MajorHiFi scale. Getting either of the two grades (A or B) puts you at the top 76% and top 62% of the IEM and headphone ranking lists respectively, which can mean anything from “best of the best” to “below average”.
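The "median is B" claim is easy to verify from the counts alone. Below is a minimal Python sketch; the helper name `median_grade` is just an illustrative choice, and it assumes the grades are listed best first.

```python
def median_grade(counts):
    """Grade held by the middle entry when entries are lined up best to worst.

    `counts` must be ordered best grade first (dicts keep insertion order).
    """
    total = sum(counts.values())
    middle = (total + 1) / 2  # 1-based position of the middle entry
    running = 0
    for grade, n in counts.items():
        running += n
        if running >= middle:
            return grade

# Counts copied from the MajorHiFi tables above.
iems = {"A": 32, "B": 94, "C": 37, "D": 2}
headphones = {"A": 28, "B": 78, "C": 50, "D": 14, "F": 1}

for name, counts in (("IEMs", iems), ("Headphones", headphones)):
    total = sum(counts.values())
    top_two = 100 * (counts["A"] + counts["B"]) / total
    print(f"{name}: median grade {median_grade(counts)}, A or B = top {top_two:.2f}%")
```

Both lists have an odd number of entries (165 and 171), so there is a single middle entry and no tie-breaking to worry about.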

On another note, assuming that the “E” grade isn’t applicable here (it’s not being used after all, can’t blame me for the assumption), this A-B-C-D-F grading system is no different from a 5-star rating system. Which calls into question why they’d even use it over the more common star system in the first place…

Look, if you’re going to rip off the audio ranking list concept (even down to separating it by IEMs and headphones and adding a separate value rating)… at least make it normally distributed too.

TL;DR

You gotta read between the lines.

WhatHiFi: Statistically, only 5-star awards are worthy of consideration.

SoundGuys: A score of 7.5/10 is statistically average, and only those rated 8/10 and above are truly significantly ahead of the pack (top 25%).

Headfonics: Virtually nothing is rated under 6/10, and the average is set at roughly 8.3/10. The worst of the bunch in terms of rating skew and bias.

Headphonesty: The best of the bunch, but still terrible. 4/5 stars is the median rating, so really only those rated at 4.5 stars and above are worthy of consideration.

MajorHiFi: Distribution puts a “B” grade at the median, with the highest grade being “A”. Statistically speaking, only A-rated models are worthy of consideration.

And for those interested in the distribution and statistics of my own ranking system…

The Update Where 393 IEMs Get Added to the Ranking List

The long awaited update where nearly 400 new IEMs get ranked, bringing the total to 886 entries.

The Update Where the Headphone Rankings Get Shuffled

The long awaited update where 46 new headphones get ranked, along with a big overhaul of existing entries.

Support me on Patreon to get access to tentative ranks, the exclusive “Clubhouse” Discord server and/or access to the Premium Graph Comparison Tool! My usual thanks to all my current supporters and shoutouts to my big money boys:

“McMadface”

Timmy

Man Ho

Denis

Alexander

Tiffany

Jonathan

34 thoughts on “Why (most) Ratings Suck: An Analysis”

I’d be extremely curious to see the plotted correlation between price and rating. Of course, ideally lack thereof. Too often I suspect reviewers are unable to stay indifferent to the price.

What about sampling bias? Aren’t decent sounding headphones statistically more likely to get selected for reviewing/ranking process either by popular demand or by drawing attention from supposedly at least somewhat knowledgeable reviewers? Most other review sites don’t rank gear they don’t review and that presents a significant investment of time likely to bias the sample to include the gear they like. A distribution heavily skewed to the right is actually what I’d expect to see unless you’re going out of your way to randomize your sample. Perhaps you are, or perhaps you think if that’s the case they should have normalized the ones they tested anyway, but I feel that caveat should be made clear before comparing ratings with incomparable sample biases.

THIS. Most reviewers in this hobby are just hobbyists. They choose to spend time with gear they like or are interested in. Even folks like SG tend to ignore 2-channel gear they don’t like. I’m not sure if any analysis can be done that doesn’t take that into account.

YES, THANK YOU. Even outside of audio it’s pretty much a given that a lot of reviewers don’t want to make negative content because people are looking for what’s actually good.

Sure, negative reviews help people know what NOT to buy (GamersNexus is a great example of this) but even then reviewers are incentivised to talk about the good stuff and not the bad.

I recall Chris of WearTesters saying multiple times over the years that he doesn’t put out wear test reviews on shoes he doesn’t like because it bums him out and also doesn’t do as well as the typical reviews.

*slow clap* This is the outcome when the sites rely on manufacturers providing samples for an “unbiased opinion” in order to have content for said site. If you call something garbage, you risk upsetting the manufacturer and cutting off your pipeline of future products to review. As a result, everything skews positive.

+1 – this, and only this, is the main trend for all the (mainly) positive 5* reviews at the moment and this article’s observations. I’ve lost count of the times lately where I have purchased something because of a very positive review and then wondered what all the fuss was about. I hardly take any notice of these sites anymore but carefully look at all of the follow-up comments and mini reviews from people who have actually paid money for a product, but even then the ‘hype train’ can’t be ignored….. always try and test things for yourself first and where it’s difficult or you can’t, then make sure you purchase from a trusted retailer with a good returns policy – remember most of these reviewers’ gear is FREE – yours isn’t.

Basically, RTINGS.com is king, all subjective/unmeasured technicalities be damned! But seriously, everything is a beautiful standardized graph there, (and there’s a lot of them) what could be better?

And if you can also find the same gear on reference-audio-analyzer.pro for those extra Delta QSD, impulse and square wave graphs, then you are in measurement heaven.

Not to disregard this site, of course – I always check the only graph provided here, too, from what I’ve read a lot of thought and testing has been put into it, therefore trustworthy. Graph comparison is awesome, too. The reviews and rankings have less veracity imho, but the ranking table and the short comment in it is much more interesting to look at than only long reviews as it is elsewhere.

As to the differences in statistical distribution, I have three potential explanations:

a) the aforementioned sites are either dishonest or mistaken, unlike IEF;

b) unlike IEF, others only select to review&rank things that are more likely to be good, not just every other random chi-fi wonder in the known universe;

c) all of this is bs – just choose any cheapo chi-fi that is not known to be uncomfortable, with poor QC or obviously distorting and/or grainy, then fix the sig with eq to your liking and you’re good to go.

Personally, I like options b or c best.

Stop recommending a website that only covers commercial products. I took a look at that website, it was all Sony and Bose. Your comment is the exact reaction of “touche”; it hurts because your recommended site follows exactly “why it sucks”.

Although I agree that most tier lists generally suck, I think you’re making a mistake in assuming a known underlying distribution to headphone / iem / speaker / audio device performance. Since there is no objective way to observe the quality of these devices, we also have no way of measuring, quantifying or counting it, ergo also no way of determining the type of the distribution. Therefore a tier list which is entirely skewed to the upper registers of ranks is just as valid as a tier list which has had the reviewer fit an artificial normal distribution – they’re both entirely subjective and ultimately entirely devoid of any grounding in scientifically evaluated evidence.

TLDR: Tier lists must follow a normal distribution, says who?

I think you missed the point of this article; it’s not necessarily about conforming to Gaussian but rather about ensuring that ratings have meaning (though a Gaussian distribution does certainly help with that, it’s more a means to an end than anything).

Imagine the extreme case: a site rating headphones on a 5-star scale but everything they rate is 5-star. The rating is therefore meaningless, even with subjectivity involved.

Now again that’s the extreme case, but now apply that to the examples that I’ve listed in the article. They didn’t rate everything the highest score, yes, but they’ve gotten close. And therein lies the problem, “meaning” is essentially stripped from what may be considered a “high score” when in fact it could merely be average in the context of everything else that they’ve rated. Aiming for a normal distribution is one solution (arguably the best solution), but realistically all I’d ask for is less bias and skew. That’s not a big ask, is it?
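To put a rough number on the "everything is 5-star" extreme case, one option (an illustrative choice here, not something the analysis above relies on) is the Shannon entropy of the rating distribution: 0 bits when every product gets the same rating, and the maximum when every rating level is used equally often. A minimal Python sketch, using the WhatHiFi counts from the article alongside two hypothetical sites:

```python
import math

def rating_entropy(counts):
    """Shannon entropy, in bits, of a list of per-rating counts."""
    total = sum(counts)
    probs = [n / total for n in counts if n > 0]
    return sum(-p * math.log2(p) for p in probs)

everything_five_star = [90, 0, 0, 0, 0]   # hypothetical extreme case
whathifi = [32, 35, 22, 1, 0]             # 5-star down to 1-star, from the article
evenly_spread = [18, 18, 18, 18, 18]      # hypothetical, every rating used equally

for name, counts in (("all 5-star", everything_five_star),
                     ("WhatHiFi", whathifi),
                     ("evenly spread", evenly_spread)):
    print(f"{name}: {rating_entropy(counts):.2f} bits")
```

Entropy says nothing about whether the ratings are right, only how much a single rating can tell you apart from the rest, which is the "meaning" being argued about here.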

In my opinion the biggest problem with ‘meaning’ in these lists is not whether 80% of all devices are ranked between 7-10 on a scale or not. Perfectly reasonable to argue that 80% of popular audiophile devices range from very good to amazing, just as it’s perfectly reasonable to argue the opposite. There is no “real” answer to questions of subjective taste such as this. As long as the reviewer / site creating the list is honest in doing so, I don’t see a problem with it.

Which leads me to my actual point. I find it highly unlikely that the majority of these websites aren’t in some way influenced by third parties, rendering their tier lists little more than an exercise in applied marketing. Therefore they are tier lists which are rather useless to anyone except for the entities involved with creating them.

So in conclusion, it is not the shape of the distribution which is the problem, but rather the fraudulent motivation behind reviewing products in the specific manner which leads to a particular, often times heavily skewed, distribution.

> a tier list which is entirely skewed to the upper registers of ranks is just as valid as a tier list which has had the reviewer fit an artificial normal distribution

If that’s true, then of course any kind of ranking and grading would be meaningless, arbitrary, worthless and useless. But if you declare that almost all IEMs you review are worth 5/5 stars because “that’s just a subjective conclusion”, the burden is on you to show that a delta distribution is just as reasonable, legit and valuable as crinacle’s more Gaussian distribution.

A distribution that looks more like a delta distribution provides little to zero valuable information, unless you can show that it’s the product of sampling bias where most of the products they review are equally excellent. But to establish that biased selection of excellence you had to compare with a sea of mediocrity to begin with. Why wouldn’t they include that mediocre population in their rankings? If they just won’t, what’s the point of grading products with stars or points? Just say they are all great compared to mediocre stuff that you won’t even mention. So the stars and points grading system would be superfluous at best. But this is unlikely the case since these sites review dozens or hundreds of products. It would be unreasonable to expect that a large sample of random stuff is equally great. That alone would be an unreasonable claim that, if true, would make the market implode with deflation. In reality, a relatively large sample of random stuff naturally follows a more Gaussian-like distribution with some standard deviation. This is a more reasonable outcome that we can expect.

“But to establish that biased selection of excellence you had to compare with a sea of mediocrity to begin with. Why wouldn’t they include that mediocre population in their rankings?”

There is no objective way to determine what is and what isn’t mediocre.

“Just say they are all great compared to mediocre stuff that you won’t even mention. So the stars and points grading system would be superfluous at best.”

Is it superfluous to say that most well known headphones and iems perform from good to excellent? And that there are hardly any “bad” products out there? I don’t think so.

“It would be unreasonable to expect that a large sample of random stuff is equally great.”

Since there is no objective way to determine what is “great” there is also absolutely no objective basis to assume any specific pattern of variation in the data.

“It would be unreasonable to expect that a large sample of random stuff is equally great. That alone would be an unreasonable claim that, if true, would make the market implode with deflation.”

Nobody is assuming that all these products are “equally” great.

“In reality, a relatively large sample of random stuff naturally follows a more gaussian-like distribution with some standard deviation. This is a more reasonable outcome that we can expect.”

The fact that a normal distribution is a possibility does not mean that it is in fact a normal distribution. Completely baseless assumption.

> Is it superfluous to say that most well known headphones and iems perform from good to excellent? And that there are hardly any “bad” products out there? I don’t think so

If you can make the distinction between mostly good, excellent and some very bad stuff then you are already describing a broader distribution of mediocre stuff followed by smaller proportions of ‘excellent’ and ‘bad’ stuff

I don’t see a possible nor meaningful workaround to that.

That is, if we understand ‘excellent’ to mean “well above the majority” and ‘very bad’ to mean “well below the majority” in terms of quality and merits, while the rest is just good, that is, mediocre.

By definition alone that should tell you what kind of distribution you should get. Whether you can make subjective or objective reviews seems irrelevant to this

Idk about USA and wherever you’re from but in a lot of the world you need a grade above 6 to pass, so in a way yeah it’s more like a 5 star system, but also sets minimum expectations in the sense that you can score a 2 or a 5, and it’s still a failing grade and not really worth consideration, which is why average is usually around a 7.5 or a C/C+ in your case…

If the population size is just large enough, the sampling bias argument to explain the skewed distribution won’t hold any water. The population doesn’t have to be that large for that to happen. A few dozen of ratings should be more than enough

It makes no sense whatsoever to expect that most products are that relatively good. Much less to conclude that!

Great article. Well presented point. I think there might be another reason for this skew in results.

I think the ratings in these examples award half of the score based on the item just working. If it makes sound, then it’s not below 5/10. The quality of the sound is what might be in the other 5 points, but usually there are some other things like packaging and features. And since many items are packaged to some standard, they get a point for it. So yeah, these ratings do have 3/10 points used, but the reason might be a bit different. Using these ratings purely for sound comparisons is of questionable usefulness.

While I think you do a better job than these others, it would still be great to have a couple more measurements that affect overall performance, like impulse response and phase response. Together they can help determine time domain and cohesiveness issues. We’ve all heard earphones that measure well in response and distortion that we just don’t care for. There’s a reason beyond preference and I think a site based strongly on technicalities would benefit from a couple more of them.

That said, keep up the good work.

Hello crinacle, SoundGuys does disclose the new measurement rig that they use for their headphones and earphones. All the newer headphones are tested with the Bruel & Kjaer 5128. They still have many measurements from the old rig on the website (unknown what it is), but it is easy to distinguish between the two. If the measurement is in black, it means that they used the new B&K 5128 rig, and if it is in white and grey, they used the old rig. They wrote an article about it – https://www.soundguys.com/introducing-the-newest-soundguy-the-bruel-kjaer-5128-45973/ .

I was really surprised that they use the Bruel & Kjaer 5128 but I think that it is a good choice, especially for over-the-ear headphone measurements.

Thanks for the update, I’ve updated my articles.

“though of course also critiquing the overly-positive vibe of many publications that may be leading our hobby to its downfall if not corrected.”

Kinda depends on the point of view, the hobby seems healthy enough, at least compared with the time of Headwize, and at least we have publications! 🙂

The main problem for the current downfall of the hobby in my opinion are some enthusiasts who don’t actually hear the gear and depend on lists/ratings which they use like some kind of bible to push “their” opinions, these days everyone is a headphone/IEM hobbyist even if they never tried the gear before. We have more enthusiasts, but their overall quality went down the drain.

Crinacle the problem in this hobby goes way beyond ratings…When was the last time you interacted anonymously in a community? Try it for a while, believe me it’s not pretty for a lot of newbies starting right now.

Best regards, always a pleasure reading your words.

Really nice article Mr.Crin, I can see your points and understand them perfectly, yet there is still something I am willing to ask in order to understand your view better:

Your issue with those websites is the fact that there are next-to-zero products that were rated badly, while the biggest % somehow ends up very high on their scoreboard.

My question here is, based on ranks only, how differently were those IEMs/headphones rated in comparison to your list? I haven’t run through the data but could it be that the big portion of the products that were rated highly on those websites *happened* to be actually acceptable (if not great or better) in your list as well?

Additionally, I think you as a reviewer and a “veteran” in the scene, have a more complex and higher standard than many sites/individuals do (I think that’s one of your main selling points too). So despite the fact that those sites are supposed to be picky and selective, do you think it’s possible that they might just be more forgiving than you? I’m trying to understand both sides here, and one point I often hear people talk about you is that there are products you rated so low while many (newcomers or experienced listeners alike) may think that they were rated incorrectly.

Hope to hear your candid reply, thanks for reading!

Gah, there’s so much to unpack here.

I’ve certainly been guilty of this, but as another guy put it, the problem for me comes down to the products themselves.

I definitely agree with the point you’re making, in that, a star system is essentially an arbitrary tool if misapplied, but the question for me becomes,

What headphones would you truly rate below 3 stars? I’d like to know of them. I can think of maybe a handful of products that I thought were truly awful. Bowers and Wilkins P7 is one that stands out, but again, I’d have to really think about it if I were to make a list. The fact is that if we’re *only* judging sound alone, I think a star system could be more useful because it takes into account one specific metric rather than 5+.

The other piece to this is honesty. If those sites are being 100% truthful about their ratings, and that’s their honest opinion, then I don’t see the problem with it. The issue is that yeah, a couple of them probably aren’t (at least 100% of the time). There is so much subjectivity that comes into play, along with other factors that make up the full rating. Looking “good” to manufacturers and companies certainly could play a part in that, as well as enticing more clicks.

Even using a pass/fail system, how many products would fail? For me, it’s not that many. I personally believe the problem here isn’t one of the quality of said product per se, but of quantity.

This is a huge issue with Amps & DACS specifically (and something I bitch about constantly), but also applies to headphones. There are simply too many products on the market, full stop. You’ve got so many companies vying for wallets that it becomes almost unethical to me in a sense. The catch of course is that most products manufactured in the audiophile sect are all actually pretty good. *cue revolver ocelot you’re pretty good* lol (This was also mentioned by a fella above).

This simple concept can be seen over and over in the countless recommendations from random commenters on my channel, blog, or really any forum. Ultimately, there’s so much to choose from, which results in lots of “good” products, subjectively speaking. So does it come down to taste more than anything? Perhaps, because at the end of the day, what are most people arguing about? A difference of opinion.

Even with all that said, I actually may get rid of my own star system because I think you’re making a great point.

In my opinion, it’s more about the content of the article and my impressions of said product that help someone make a buying decision rather than a # at the end which may not even coincide with what I wrote. I will give all those sites (even WHAT HIFI bleh) the benefit of the doubt in saying that it’s actually quite difficult to give a completely objective rating when you’ve just spent 2000+ words talking about something in a mostly positive light. It’s almost an afterthought. Highlight the good and bad, then rate it. I honestly think ratings in a way have become entirely unnecessary since you’ve just poured out hours of impressions onto the page, but also they’ve morphed into this weird sort of “cherry on top”. It makes the page look good, it forces the viewer to hone in on the pretty box. In essence, it’s a marketing tactic and nothing more.

The real value for me lies in comparing product A to product B, something companies don’t like when they send me stuff, but is one of the best ways to gauge if something is worth buying. They want a single review of said product so it will entice people to buy without really thinking about it or weighing anything. I don’t even have to ask them. They know and I know. It’s sort of unsaid.

I think moving forward the goal for everyone should be to just help others as much as they can while giving the most complete and in-depth reviews and guides possible. As you mentioned, Headphonesty is good about this and I’ve also had to re-evaluate some of my really old content and decide whether it was actually good or not, what to do with it (consolidate, merge, etc.), and how to improve moving forward.

That’s all I got for now. I’m tired lol.

Dude… tbf your ratings are not that good either. You are without doubt one of the most closed-minded reviewers, if not the most, that I’ve ever known. Every time I test a new IEM, I’m like “ahh… crin is going to love this” or “OK, this $500 IEM is going to be placed far below the Starfield in crin’s ranking system”, and so far I’ve never been wrong in my guess, because your scoring system is so stoic that it gets awfully predictable. Harman and DF Neutral are certainly not the holy grail, and frequency response is not the sole factor in determining the score of the so-called “technical aspect”. I know a lot of IEMs that can obliterate the KXXS in terms of technical aspects such as separation, imaging, decay, transients, etc. But hey, because it ain’t Harman you wrote it up as “lack of technical”. I mean, what “technical” did you mean? While I agree to some extent that most of the websites you mention are too easy with good scores, sometimes they still give a more versatile viewpoint on the stuff they review.

I don’t get your logic, so a reviewer is better if they’re unpredictable? If you don’t even know what they’re thinking?

If you want twists, go see a Shyamalan movie. Why are you even on my website.

“Why are you even on my website.”

In large part, this attitude is why I like this site. Classic.

I think he means, maybe, that just like they only give fours or fives, you go the other way, by giving “threes” to stuff that might just as well be scored higher if you had a different taste. Take, for example, a very dark or bassy earphone, or one that has detail but not the kind you prefer; or you value some traits over others, or have a completely different frame of mind about something, which can happen: every time you hear a certain aspect of a sound you think x while someone else thinks y, and the other person attributes importance to that thing, and the like. In some kind of weighted “objective view”, things can maybe go past a certain threshold and be good enough, basically. And I don’t think it is your fault, but reviews and ratings get taken as gospel even though you yourself tell others not to take them that way. When it is earphones, and not art, book reviews or film, it doesn’t seem great when taste puts something in “average” or “OK” when it could be someone else’s “favorite”. Unlike here, when that happens with film reviews, for example, it is usually pretty apparent to anyone, so there is a big difference. No one is gonna overlook Men in Black if someone rates it a 3, but they most likely will overlook an IEM that may be worth trying. It matters for diversity, for what’s constructive or fair, and for what it can ultimately lead to in the long run when things are deemed inferior and overlooked.

I mean, I get what Crinacle is saying, that you would expect a normal distribution for a selection of reviews, but I don’t think it’s that simple.

For example, let’s say you gave a multiple-choice test to a bunch of students. And the students had done their homework or whatever. And the average grade was a “B”.

You’d say well that’s not normally distributed, the average should be “C”. But it’s not. Because the students did the homework.

Like I remember going to Audio46 and trying a whole slew of headphones they had there and I wouldn’t describe a single headphone I tried as “bad”. So it depends what you’re grading for.

If I said all the headphones I tried were pretty good, then you’d say well you’re a biased shill or something, but no, they were all pretty good. Some I liked better than others, but that doesn’t mean they deserve 1 star or an “F” grade just because they’re not as good as the other good headphones.

So I don’t agree with Crinacle’s take here at all.

Education has a bar to pass, and clearing it does not by itself mean that the students are remarkable. Sure, they passed or did well, but if they join a competition, they may or may not perform as expected since, well, it’s a competition: only the best win.

The same goes here: making competitive products is not that simple.

What is the point of releasing a broken product to get reviewed in the first place, and having it graded 1-5 in, let’s say, a grading system where the top 75% sit at the higher grades (example: 8-9), when resources are limited and most people will only be able to afford one or two products?

Rating systems are there to help shortlist these products, since there is no way most people would invest in several products of the same grade if they are all placed near the peak of the rating system. (P.S.: the products are categorically the same, if you will.)

I agree with your point: having to follow a distribution curve actually makes grade ratings a bad representation.

What a rating should be able to do is let us know how much better (quantitatively) one product is compared to another. Having a nominal distribution only tells us which percentile a product falls in.

An example of how it can be flawed is shown below.

Say I scored 5 IEMs at 9.X/10, where “X” ranges from 5 to 9.

Having to distribute them in a nominal distribution (A-E, 20% per category) means:

The 9.5/10 IEM would be grade E.

The 9.6/10 IEM would be grade D.

The 9.7/10 IEM would be grade C.

The 9.8/10 IEM would be grade B.

The 9.9/10 IEM would be grade A.

At first glance, without looking at the scores, one would think that there is a huge difference in sound quality between a grade A and a grade E IEM. However, note that under a nominal distribution the grades represent each IEM’s percentile, not its performance. These IEMs are actually pretty similar in performance, as seen in the scores.
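The grading example above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the IEM names are placeholders, and `grade_by_rank` is a hypothetical helper that splits the ranked list into equal-sized grade buckets (20% each for five grades), which is one plausible reading of the scheme described in the comment.

```python
# Hypothetical scores from the example: five IEMs rated 9.5 to 9.9 out of 10.
scores = {"IEM 1": 9.5, "IEM 2": 9.6, "IEM 3": 9.7, "IEM 4": 9.8, "IEM 5": 9.9}


def grade_by_rank(scores, letters="ABCDE"):
    """Assign letter grades purely by rank, with an equal share of items per grade."""
    ranked = sorted(scores, key=scores.get, reverse=True)  # best score first
    per_grade = len(ranked) / len(letters)  # items per grade bucket
    return {
        name: letters[min(int(i / per_grade), len(letters) - 1)]
        for i, name in enumerate(ranked)
    }


grades = grade_by_rank(scores)
spread = max(scores.values()) - min(scores.values())

for name, score in scores.items():
    print(f"{name}: {score}/10 -> grade {grades[name]}")
print(f"Score gap between grade A and grade E: {spread:.1f} points")
```

The letter grades span the full A to E range even though the underlying scores differ by less than half a point, which is the mismatch between percentile and performance that the comment describes.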

This is a simple and straightforward explanation of how it can be skewed mostly due to dilution in particular regions, which is not uncommon for IEMs, and needs no further explanation.

I think if you expect review scores to be normally distributed, you assume manufacturers make products with random quality targets. Obviously, that’s not the case, they try to make the best product they are capable of making at a defined bill of materials level. Hence the distribution of scores should in fact be biased to the right. And that bias will increase as part production is more and more outsourced/standardized.

If you were to use narrow categories of products, then, maybe, review scores would be more normally distributed. Yet, in that case, I find that minor quality differences end up being magnified artificially.

Now, the elephant in the room is something else: Most online reviewers will give an “average/passing/standard” grade to most products because they do not want to be black-balled by manufacturers and risk the flow of products they receive at no charge for review. Therefore they couch their reviews using the “this product is good; but this product is better” formula and their scores reflect that.

But they don’t review stethoscopes found on aeroplanes, do they.